"Shifting left" secures PQC implementations from physical attacks

The threat of implementation attacks

The primary threat discussed in this blog post is implementation attacks. They are often dismissed as requiring a high level of sophistication, yet real-world cases have shown that critical assets in digital devices can be compromised with less than $1,000 worth of equipment and a few hours to a few days of effort. This risk applies to all hardware devices, regardless of the embedded chip.

Two major techniques stand out: side-channel attacks and hardware fault injection.

- Side-channel attacks exploit unintended information leakage, such as power consumption or electromagnetic emissions, to extract secret cryptographic keys during algorithm execution. These attacks exploit vulnerabilities in the implementation rather than in the algorithm and thus apply to all cryptographic algorithms, including AES for encryption, ECDSA for signatures, and even the latest post-quantum algorithms. One notable case involves an Apple chip.

- Hardware fault injection deliberately disrupts a chip’s operation, potentially bypassing critical security mechanisms. A common example is bypassing signature verification in a bootloader, as seen in an attack on a Mediatek chip.

The challenge of verifying protections

To defend against implementation attacks, chip manufacturers and embedded software developers must implement robust countermeasures. These protections rely on a mix of mathematical principles and secure coding techniques to either prevent attacks or make them significantly harder to execute.

However, validating these protections is far from straightforward. Implementation attacks exploit the interplay of hardware and software behavior, so protections can only be fully validated by running the final software on the final hardware.

Since implementation attacks involve direct hardware manipulation, testing them requires specialized labs with advanced instrumentation. This real-world verification process is not only expensive but also highly disruptive, especially if a vulnerability is found late in development. Fixing a security flaw at this stage delays time to market and significantly increases costs, as it may require partial or full re-engineering. In the worst case, the chip has to be re-manufactured, imposing a 12-month time-to-market delay and millions of dollars in manufacturing costs.

A better approach: early-stage verification

Verifying as early as possible in the development cycle minimizes the impact of fixing vulnerabilities. By integrating security testing before the hardware is finalized, weaknesses can be detected and fixed sooner, reducing both costs and delays while ensuring stronger protection against implementation attacks.

However, the overwhelming majority of applications are developed without the ability to easily change the underlying hardware. In this first blog post we therefore focus on the software development cycle; we will cover the hardware development cycle in a follow-up blog post.

The software development cycle

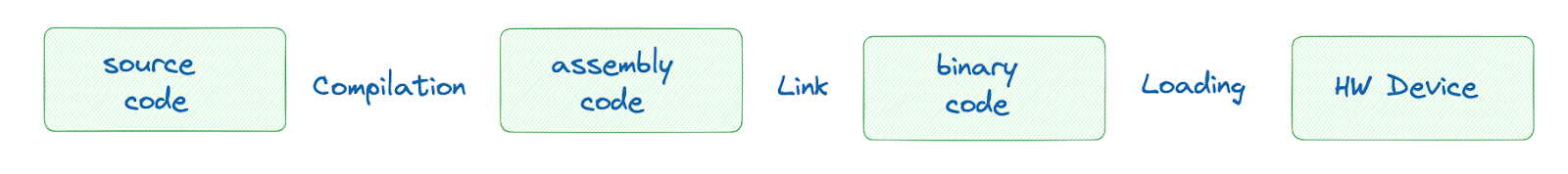

The software development and deployment cycle is a multi-stage process that transforms high-level code into a program that can be executed on hardware.

Tasked with implementing specific algorithms or functionality, developers write source code in a high-level language such as C, Rust, or Go. This source code is then compiled: a compiler translates it into assembly code, a low-level representation of machine instructions tailored to the target's processor architecture, which is in turn assembled into object code. The linker combines this object code with other compiled modules and libraries, resolving symbolic references to create a unified binary executable. This binary contains machine code that is directly interpretable by the target processor. In the loading phase, the operating system's loader brings the binary into memory and sets up the execution environment. Finally, the software is executed on the hardware device.

Software protections start at the code level. This requires specific code implementation to mitigate or prevent the risk:

- Cryptographic algorithms must be designed to prevent secret key leakage. Intermediate variables should be handled in a way that prevents attackers from linking them to an estimation of the secret key through side-channel analysis.

- Sensitive code must include integrity checks to ensure that no unauthorized modifications have been made during execution. These verifications help detect and prevent tampering, ensuring that the code remains intact throughout processing.

Software developers must ensure that security protections are both effective and properly implemented. In other words, they need to validate the logic behind these defenses and conduct dedicated tests to confirm their reliability.

However, this presents a unique challenge. Code protections against implementation attacks often resemble unprotected code in functionality:

- Side-channel protections do not alter the cryptographic operation’s output, making it difficult to detect implementation flaws through standard testing.

- Fault injection countermeasures often involve additional checks that remain dormant during normal execution, only triggering under attack conditions.
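To make the first point concrete, here is a minimal sketch of a first-order Boolean masking of a key addition, next to a flawed variant whose mask is stuck at zero. The function names and the 8-bit toy values are assumptions chosen for illustration and do not come from any particular library. Both variants return the same output, so a functional test cannot tell them apart, yet the flawed one manipulates the unmasked intermediate value.

```python
import secrets
from typing import Optional, Tuple


def unmasked_add_key(pt: int, key: int) -> int:
    """Reference key addition: the intermediate value is pt ^ key itself."""
    return pt ^ key


def masked_add_key(pt: int, key: int, mask: Optional[int] = None) -> Tuple[int, int]:
    """First-order Boolean masking of the key addition (illustrative only).

    Returns (output, masked_intermediate). The key is split into two shares,
    (key ^ mask) and mask, and only the masked share is combined with pt.
    """
    if mask is None:
        mask = secrets.randbits(8)        # fresh random mask per execution
    key_share = key ^ mask
    masked_intermediate = pt ^ key_share  # value a side-channel probe would observe
    output = masked_intermediate ^ mask   # recombination, stands in for final unmasking
    return output, masked_intermediate


pt, key = 0x3C, 0xA7
out_ok, seen_ok = masked_add_key(pt, key)
out_bad, seen_bad = masked_add_key(pt, key, mask=0)  # flawed: mask stuck at zero

# Functional tests pass in both cases ...
assert out_ok == out_bad == unmasked_add_key(pt, key)
# ... yet the flawed version exposes the unmasked intermediate value.
assert seen_bad == pt ^ key
```

Only an analysis of the intermediate values, not of the outputs, reveals the difference between the two variants.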

Because of this, traditional testing methods are not enough. Specialized validation approaches are required to ensure these protections truly work. This requires dedicated tooling able to validate the leakages and stress the code against disruptions. This step must be approached with a worst-case scenario mindset. In other words, the validation process should assume ideal hardware leakage conditions, free of noise, and consider the most favorable fault injection scenarios for an attacker.

To keep this process manageable and avoid excessive complexity, a clear fault model policy should be established. This policy should define key parameters, such as:

- The level of fault granularity (byte-level or bit-level).

- Specific forced logical values (e.g., 0x00 or 0xFF).

- Whether instructions can be skipped or manipulated.

By defining these parameters early, developers can ensure a structured and efficient approach to evaluating security protections against implementation attacks.
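As a rough illustration, such a policy could be captured in a small data structure that a test campaign then expands into concrete faulted values. The class, field, and function names below are hypothetical, and the expansion rule (single bit flips for bit-level granularity, forced byte values for byte-level granularity) is one possible reading of the parameters above, not a prescribed one.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple


class Granularity(Enum):
    BIT = "bit"
    BYTE = "byte"


@dataclass(frozen=True)
class FaultModelPolicy:
    """Hypothetical container for the fault model parameters."""
    granularity: Granularity
    forced_values: Tuple[int, ...] = (0x00, 0xFF)  # values a byte may be forced to
    allow_instruction_skip: bool = True            # consumed by the campaign driver


def enumerate_data_faults(policy: FaultModelPolicy, word: int, width_bytes: int = 4) -> List[int]:
    """List every faulted version of `word` allowed by the policy."""
    faulted = []
    if policy.granularity is Granularity.BIT:
        # Bit-level model: flip each bit of the word in turn.
        for bit in range(8 * width_bytes):
            faulted.append(word ^ (1 << bit))
    else:
        # Byte-level model: force each byte (least significant first) to each value.
        for index in range(width_bytes):
            shift = 8 * index
            cleared = word & ~(0xFF << shift)
            for value in policy.forced_values:
                faulted.append(cleared | (value << shift))
    return faulted


bit_policy = FaultModelPolicy(Granularity.BIT)
byte_policy = FaultModelPolicy(Granularity.BYTE)
print(len(enumerate_data_faults(bit_policy, 0x12345678)))         # 32 candidate faults
print([hex(v) for v in enumerate_data_faults(byte_policy, 0x12345678)])
```

Keeping the policy explicit bounds the number of fault candidates per target, which is what keeps an exhaustive campaign tractable.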

Once protections are implemented, it is crucial to prevent regressions that could weaken or remove them during development cycles.

Some security measures, particularly those designed to counter implementation attacks, can be inadvertently disabled by compiler optimizations. Without proper safeguards, critical protections might be stripped away, leaving the system vulnerable.

To mitigate this risk, continuous validation and automated security checks should be integrated into the development workflow, ensuring that protections remain intact throughout updates and optimizations. A hardware lab is a valuable complement to these ongoing activities, allowing the final verification to be conducted on the actual hardware.

Our solution

Leveraging emulation

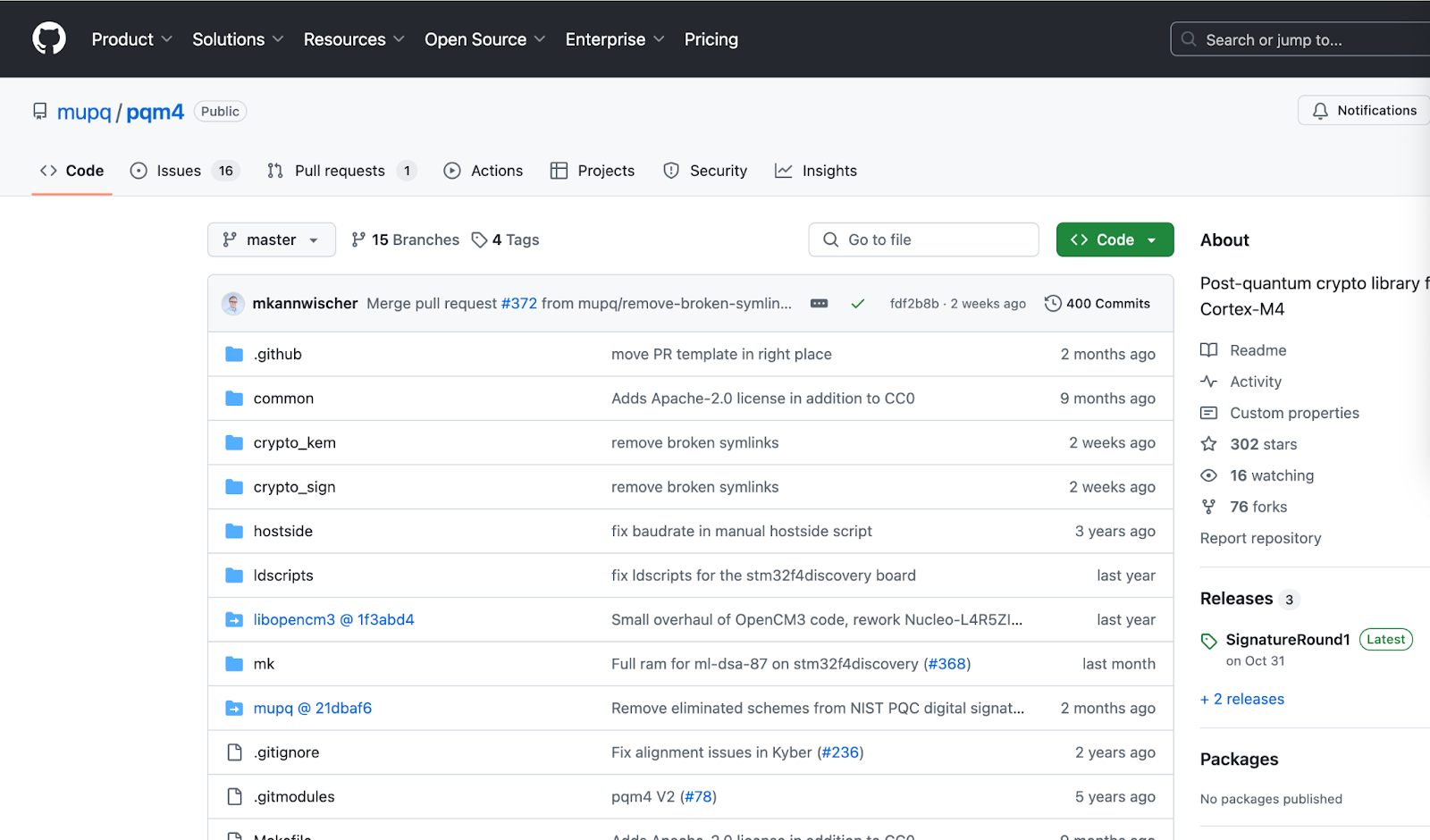

We started with a public Kyber implementation written in C.

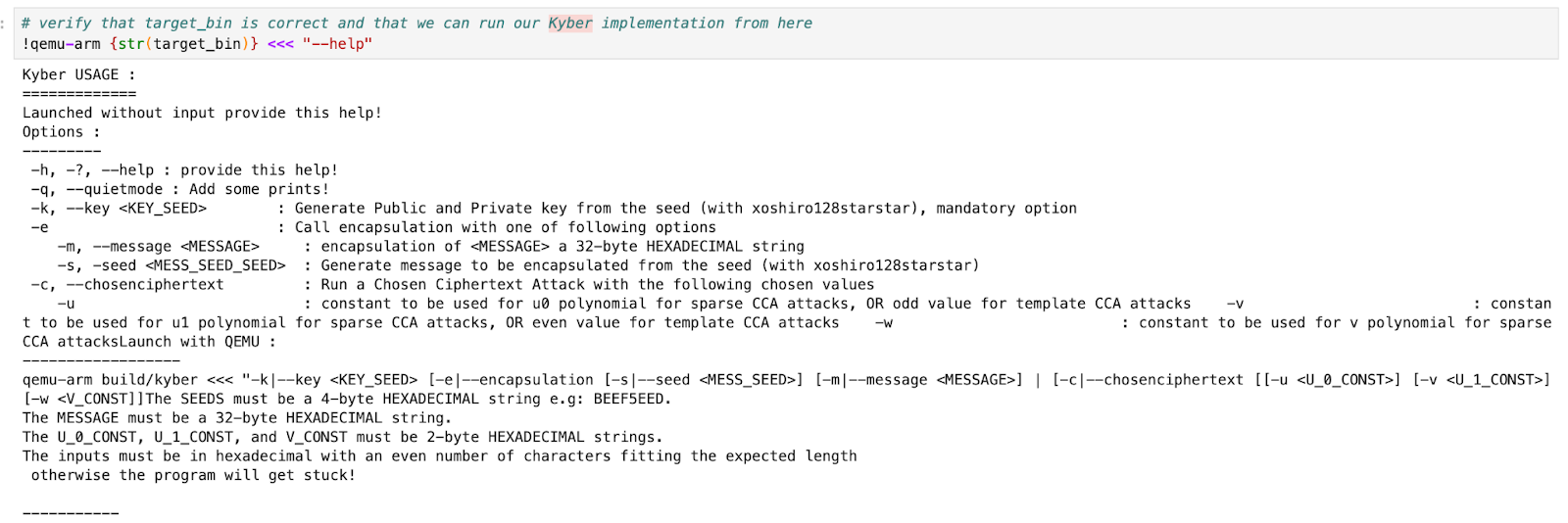

The code is fully functional and not particularly protected against side-channel attacks. Our first task was to compile it for an STM32 target to generate a binary. The same binary could be loaded onto a hardware device, but our aim was to execute it with the QEMU emulation framework.

Once this was in place, we used eShard’s QEMU-based engine, available in both the esDynamic and esReverse platforms, to start tracing. This engine runs the actual compiled binary in an emulated environment, making it possible to capture detailed execution traces without waiting for physical hardware.

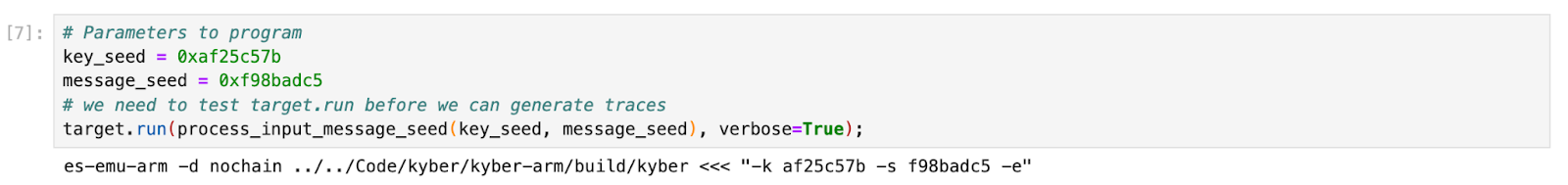

To drive the execution, we implemented a caller function that feeds the Kyber implementation with a key and a message seed. The execution quickly succeeded:

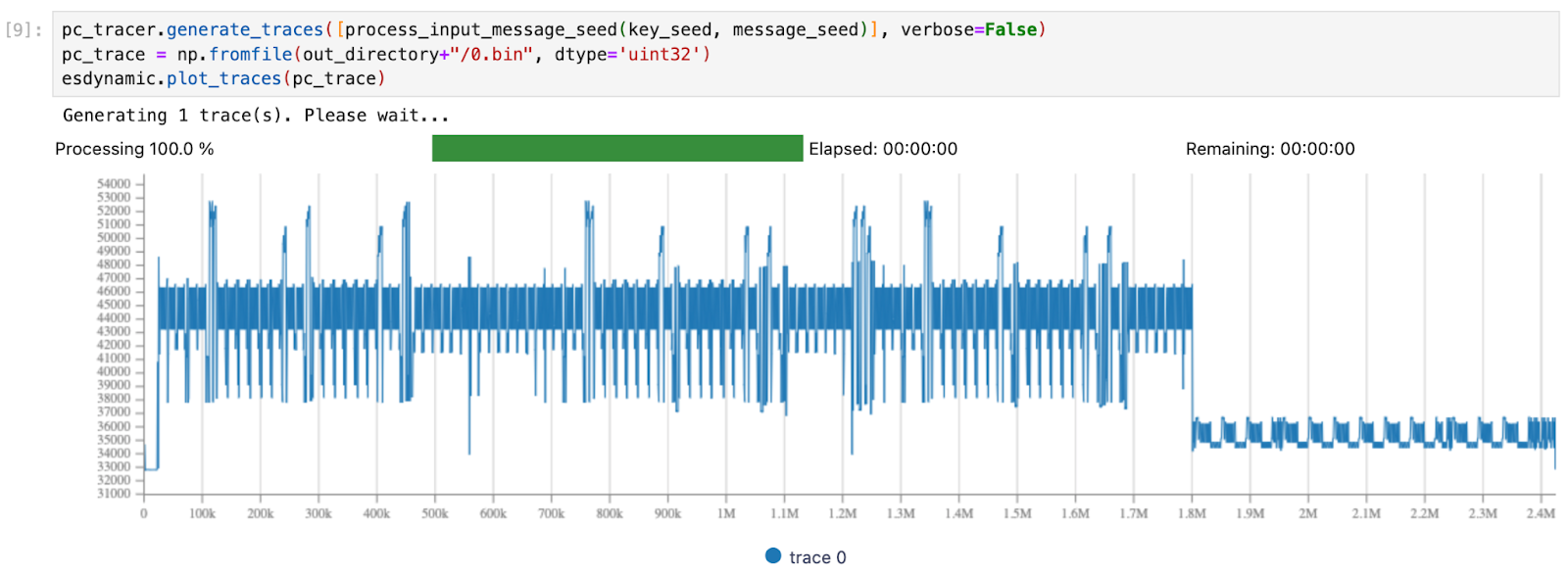

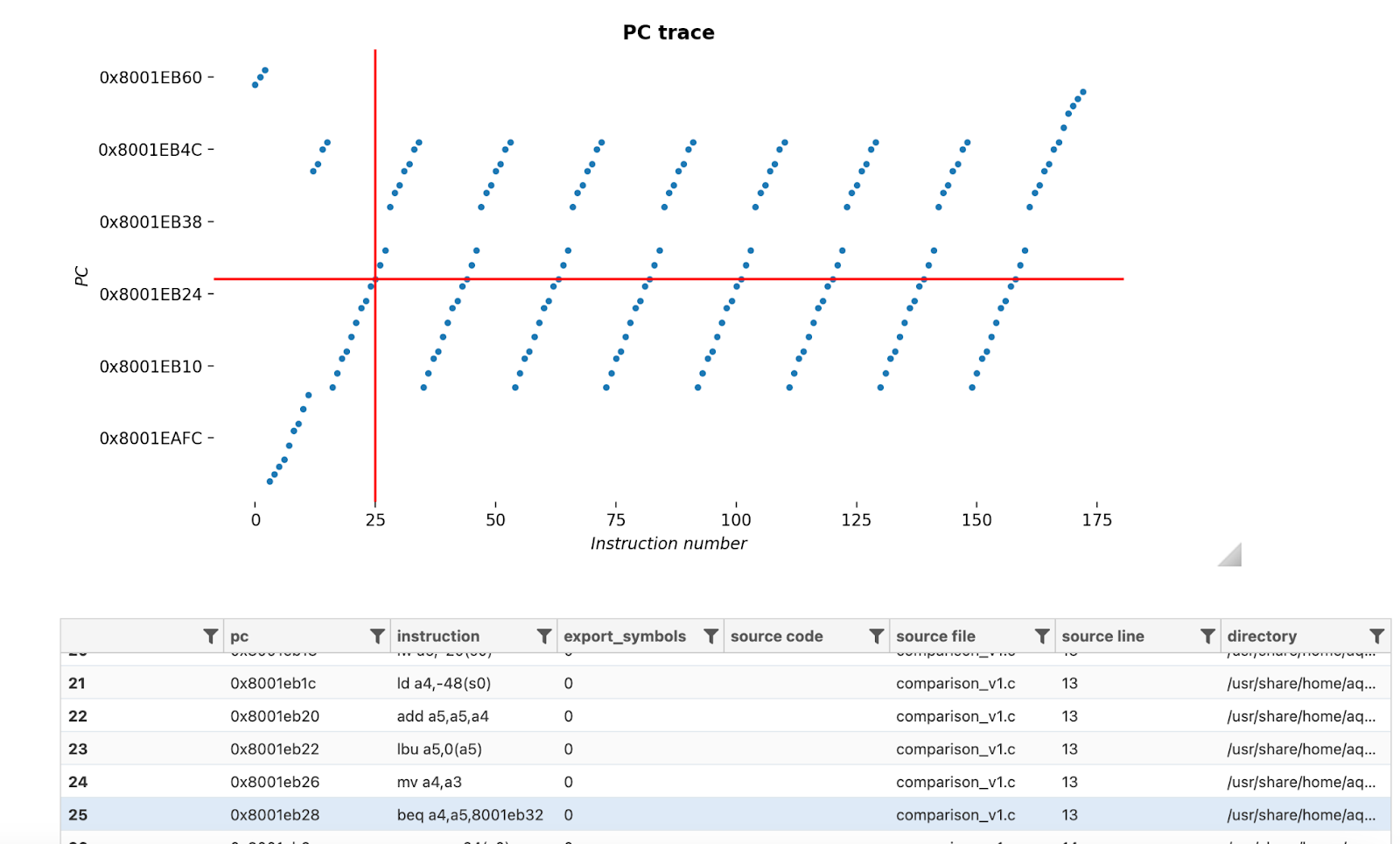

At this stage, tracing requires deciding which information is worth recording. A common first step is to trace the program counter to get a global overview of the execution.

The trace shows that a single execution amounts to 2.4 million points. Reducing this number would make further analyses much faster.

Optimizing the analysis

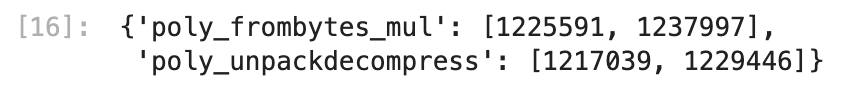

Since we have the source code, all symbols are available to us, so we can target a specific function. In a Kyber implementation, the operations after the NTT transformation are a relevant side-channel target. A quick look at the code gave us the relevant instruction indices:

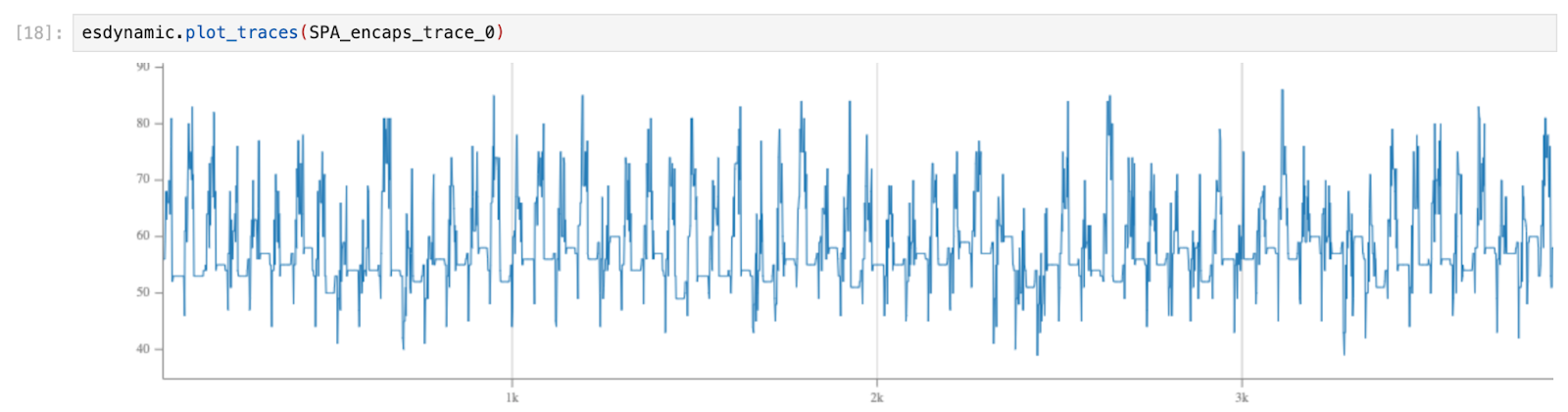

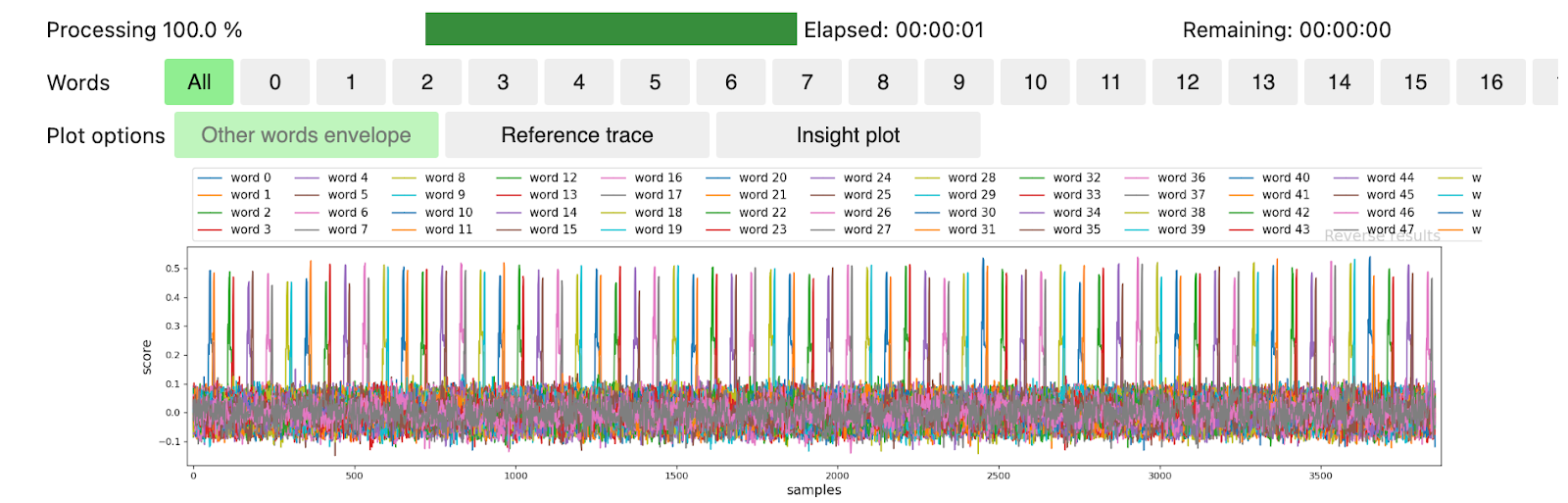

It was then possible to trace register values while the target function was executing. After inspecting the generated assembly code, we chose to trace the registers ["r4","r5","r6","r7","r9","r11"] and to combine their Hamming weights. This gave a data trace of fewer than 4,000 points.
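As a rough illustration of how register values can be turned into trace points, the sketch below sums the Hamming weights of the six traced registers at each recorded instruction. The summation as the combining rule, the 32-bit register width, and the function names are assumptions; the snapshot format is hypothetical and does not reflect the tracer's actual output.

```python
from typing import Dict, Iterable, List

TRACED_REGISTERS = ["r4", "r5", "r6", "r7", "r9", "r11"]


def hamming_weight(value: int) -> int:
    """Number of bits set in a 32-bit register value."""
    return bin(value & 0xFFFFFFFF).count("1")


def leakage_point(snapshot: Dict[str, int]) -> int:
    """Combine the traced registers into one point (assumed here: a plain sum)."""
    return sum(hamming_weight(snapshot[name]) for name in TRACED_REGISTERS)


def build_trace(snapshots: Iterable[Dict[str, int]]) -> List[int]:
    """One point per executed instruction of the target function."""
    return [leakage_point(snapshot) for snapshot in snapshots]


# Hypothetical register snapshots taken at two consecutive instructions.
snapshots = [
    {"r4": 0x000000FF, "r5": 0x1, "r6": 0, "r7": 0, "r9": 0, "r11": 0},
    {"r4": 0x0000000F, "r5": 0x3, "r6": 0x80000000, "r7": 0, "r9": 0, "r11": 0},
]
print(build_trace(snapshots))  # [9, 7]
```

The Hamming weight is a common noise-free leakage model, which fits the worst-case mindset of simulated traces.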

Generating a dataset and analyzing it

From there, it is possible to generate a dataset with variable inputs. It takes only 10 seconds to generate 1,000 traces with the optimized tracer on our multi-core CPU. From this point, the resistance of the code to different attacks can be validated. At eShard, we have developed a catalogue of such attacks in the specialized PQC module. Alternatively, one can test for the absence of leakage, either with a T-Test or with a correlation against a specific value. This is exactly what we did on the generated dataset, confirming that the code is unprotected: the resulting graph shows that each word of the secret leaves a signature when it is handled in the multiplication performed in the NTT representation.
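For readers unfamiliar with the T-Test, the following sketch runs a fixed-versus-random Welch t-test on simulated two-sample traces. Everything about the simulation is an assumption made for illustration: the toy leakage model (Hamming weight of a product modulo the Kyber modulus 3329), the key value, and the function names. It is not the analysis code of the PQC module.

```python
import random
from math import copysign, inf, sqrt
from statistics import fmean, variance

Q = 3329    # Kyber modulus
KEY = 1234  # hypothetical secret coefficient


def hamming_weight(value: int) -> int:
    return bin(value).count("1")


def simulate_trace(index: int, data: int) -> list:
    """Two points: a data-independent one and one depending on data * KEY mod Q."""
    return [hamming_weight(index), hamming_weight((data * KEY) % Q)]


def welch_t(group_a: list, group_b: list) -> list:
    """Welch t statistic for each sample index across two groups of traces."""
    t_values = []
    for sample_a, sample_b in zip(zip(*group_a), zip(*group_b)):
        diff = fmean(sample_a) - fmean(sample_b)
        spread = sqrt(variance(sample_a) / len(sample_a)
                      + variance(sample_b) / len(sample_b))
        if spread == 0:
            # Noise-free corner case: both groups are constant at this sample.
            t_values.append(0.0 if diff == 0 else copysign(inf, diff))
        else:
            t_values.append(diff / spread)
    return t_values


rng = random.Random(0)
fixed_group = [simulate_trace(i, 0) for i in range(500)]                     # fixed input
random_group = [simulate_trace(i, rng.randrange(1, Q)) for i in range(500)]  # random input

t_values = welch_t(fixed_group, random_group)
leaky_samples = [i for i, t in enumerate(t_values) if abs(t) > 4.5]
print(leaky_samples)  # only the data-dependent sample is flagged
```

A sample whose statistic exceeds the threshold in absolute value behaves differently for fixed and random inputs, which indicates data-dependent leakage at that point of the execution.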

Fault injection, leveraging a faulter engine

In the same vein, the software execution can be tested against fault injection. An example was given in a blog post targeting public code from Arm. The tool is flexible enough to let security evaluators define the fault injection parameters in line with their policy:

- The fault model: flip bit, force a register or a memory value

- The fault location: a register, a memory location

- The success criteria: what makes a fault injection a success

Systematic testing can be implemented to validate a specific function: the same piece of code can be executed many times to test many attack variants, such as targeting each instruction of a function with a chosen fault model and location.

The testing routine can be made robust to recompilation, since the test campaign can be configured with a specific symbol or label.
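The idea of a systematic campaign can be sketched on a toy example. The miniature instruction set, the program, the `LABELS` table, and all function names below are hypothetical and only serve to show the loop structure: resolve a label to an instruction range, skip each instruction in turn, and apply a success criterion. A real campaign would run the compiled binary in the emulator instead.

```python
# Toy program: grant access only if r0 (computed value) equals r1 (expected value).
PROGRAM = [
    ("mov", "r2", 0),     # 0: status = denied
    ("cmp", "r0", "r1"),  # 1: compare the two values
    ("bne", 4),           # 2: mismatch -> jump over the grant
    ("mov", "r2", 1),     # 3: status = granted
    ("halt",),            # 4: stop
]
LABELS = {"check_status": (0, 5)}  # hypothetical label -> [start, end) instruction range


def run(program, regs, skip_index=None, max_steps=100):
    """Execute the toy program; the instruction at skip_index is not executed."""
    regs = dict(regs)
    flag_equal = False
    pc = 0
    for _ in range(max_steps):
        if pc >= len(program):
            break
        current, op = pc, program[pc]
        pc += 1
        if current == skip_index:
            continue  # fault model: instruction skip
        if op[0] == "mov":
            regs[op[1]] = op[2]
        elif op[0] == "cmp":
            flag_equal = regs[op[1]] == regs[op[2]]
        elif op[0] == "bne":
            if not flag_equal:
                pc = op[1]
        elif op[0] == "halt":
            break
    return regs


def campaign(program, regs, label, is_success):
    """Skip each instruction of the labelled range and collect successful faults."""
    start, end = LABELS[label]
    return [i for i in range(start, end)
            if is_success(run(program, regs, skip_index=i))]


def granted(regs):
    """Success criterion: access granted although the values differ."""
    return regs["r2"] == 1


inputs = {"r0": 0x1111, "r1": 0x2222, "r2": 0}
assert not granted(run(PROGRAM, inputs))  # the unfaulted run denies access
successes = campaign(PROGRAM, inputs, "check_status", granted)
print(successes)  # [2]: skipping the conditional branch bypasses the check
```

Because the campaign is addressed through a label rather than fixed addresses, the same test definition still applies after the code is recompiled and the instructions move.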

Exploiting the specialized testing tool

With this capability in hand, the developer is in full control and can run short cycles, from code to dataset. Once everything is set up, a cycle takes less than one minute, which makes it possible to improve the code and run verifications throughout development.

On top of that, the same capability can be integrated into a CI/CD pipeline simply by specifying a success criterion. This can be either a T-Test absolute value staying below a threshold, e.g. 4.5, or a verification that no suspicious correlation emerges.
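A pass/fail criterion of this kind could look like the sketch below, which turns a list of T-Test values into a process exit code that a CI job can act on. The function names and the way the values reach the script are assumptions; only the 4.5 threshold comes from the text.

```python
from typing import Iterable, Tuple

T_TEST_THRESHOLD = 4.5


def leakage_gate(t_values: Iterable[float],
                 threshold: float = T_TEST_THRESHOLD) -> Tuple[bool, float]:
    """Return (passed, peak), where peak is the largest absolute t value."""
    peak = max((abs(t) for t in t_values), default=0.0)
    return peak < threshold, peak


def ci_exit_code(t_values: Iterable[float]) -> int:
    """Exit code for the CI job: 0 lets the build proceed, 1 fails it."""
    passed, peak = leakage_gate(t_values)
    verdict = "PASS" if passed else "FAIL"
    print(f"{verdict}: T-Test peak {peak:.2f} (threshold {T_TEST_THRESHOLD})")
    return 0 if passed else 1


print(ci_exit_code([0.3, -1.2, 4.4]))  # prints a PASS line, then 0
print(ci_exit_code([0.1, -7.9]))       # prints a FAIL line, then 1
```

In a pipeline, the returned code would typically be passed to `sys.exit` so that a regression in a countermeasure fails the build.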

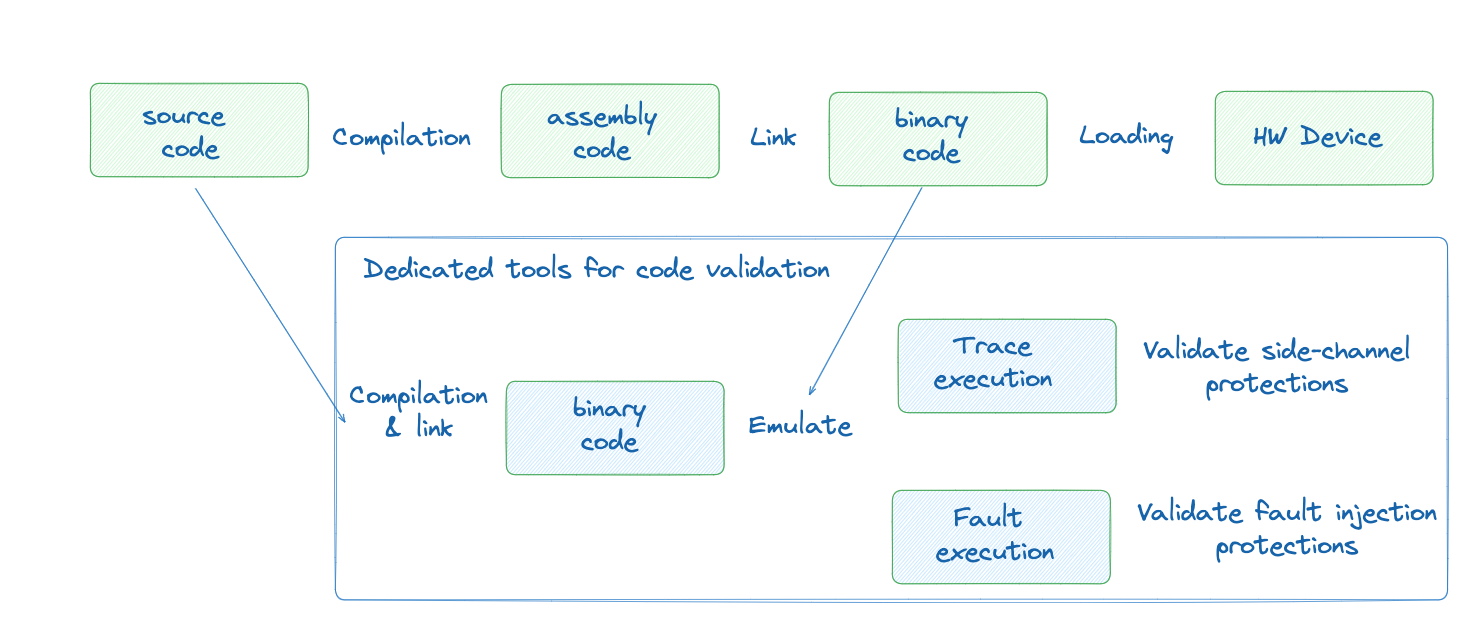

The following diagram shows where the testing capability can be integrated in the development cycle:

This shows that security verifications are already possible during the development process.

Root Cause Analysis

When a defect is identified, quickly analyzing its root cause is critical. This challenge becomes even greater in post-silicon implementation attacks, where access to runtime execution is limited and thus root cause analysis is orders of magnitude harder than in debug mode.

The testing capabilities described in this post offer powerful tools to overcome this limitation. By gaining access to runtime data, it becomes possible to trace a leakage or fault back to the exact instruction responsible. From there, disassembly techniques help pinpoint the vulnerability in the original code.
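The mapping step can be sketched as a lookup of each faulted address in a sorted symbol table. The addresses, symbol names, and list of successful faults below are made up for illustration; in practice they would come from the binary's symbol information and from the campaign results.

```python
from bisect import bisect_right
from collections import Counter

# Hypothetical symbol table: (start address, symbol name), sorted by address.
SYMBOLS = [
    (0x08000100, "main"),
    (0x08000180, "load_image"),
    (0x08000240, "verify_signature"),
]
SYMBOL_STARTS = [start for start, _ in SYMBOLS]


def symbol_for(address: int) -> str:
    """Resolve an instruction address to 'symbol+offset'."""
    index = bisect_right(SYMBOL_STARTS, address) - 1
    if index < 0:
        return "<unknown>"
    start, name = SYMBOLS[index]
    return f"{name}+0x{address - start:x}"


# Hypothetical addresses at which an injected fault met the success criterion.
successful_faults = [0x08000244, 0x08000244, 0x0800024C, 0x08000184]

histogram = Counter(symbol_for(address) for address in successful_faults)
for location, count in histogram.most_common():
    print(f"{location}: {count} successful fault(s)")
```

Sorting the locations by hit count points the evaluator to the instructions that most need a countermeasure.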

In the example below, every successful fault injection was mapped to its corresponding instruction, enabling security evaluators to trace the issue back to the code and apply the necessary fixes.

Integrate security from day one

The development of secure software is a multi-stage process that includes requirements gathering, architecture definition, design, coding, and testing. To maximize efficiency and minimize costs, security must be incorporated from the start into each stage. Security objectives should be established as early as possible, based on the intended level of security and the capabilities of potential attackers—whether hobbyists, major organizations, or nation-state actors.

Define security profiles early

At PQShield, attacker capabilities are mapped to three product security profiles, which then guide the entire development process:

- Cloud grade protects against remote attacks.

- Edge grade adds protection against attackers with basic attack potential, for instance script kiddies using cheap equipment.

- Government grade protects against attackers with high attack potential, for instance world-class experts with a fully equipped attack lab.

Once these profiles are established, they must be verified continuously throughout development.

Create a comprehensive security test plan

Effective testing requires a security test plan that evolves with the project. In Side-Channel Analysis (SCA), sensitive data is identified and tested for leakage with methods such as T-Tests or Correlation Power Analysis (CPA). For fault injection, code sections critical to security—like signature verification or cryptographic operations—are pinpointed. Test complexity is matched to assurance goals: single fault tests or standard SCA for low-cost threats, more intricate designs such as higher-order attacks for high assurance.

Automate testing in the CI/CD pipeline

Central to robust automated testing is a comprehensive test suite created by SCA or FI experts. The suite covers every test in the plan, runs seamlessly in the CI/CD pipeline, and applies clear pass/fail criteria (for example, a T-Test peak greater than 4.5 denotes failure). When a test fails, root-cause analysis is performed, the vulnerability is fixed, and tests are repeated until all issues are resolved. The suite is designed for maintainability and scalability so new tests can be added as the software evolves and new threats emerge.

Continuous validation in practice

PQShield maintains significant server capacity and measurement bandwidth to evaluate its products around the clock. PQC software libraries are continually tested for memory safety and constant-time execution across hardware ranging from Cortex-M microcontrollers to Intel Xeon servers. In 2024, this process uncovered and disclosed a compiler-introduced vulnerability in several open-source ML-KEM implementations. The PQC hardware portfolio undergoes equally rigorous testing, including fuzzing, timing analysis, and resistance to SCA and FI attacks at varying complexity levels.

Adhering to these guidelines ensures predictable security outcomes. Continuous testing provides verifiable evidence for customers and empowers external security labs to confirm security claims efficiently.

Conclusion

Implementation attacks such as side-channel leaks and fault injection are now cheap, fast, and effective. They can break almost any device, no matter which chip is inside, and they strike long before traditional lab testing even begins.

Moving security checks to the start of the project solves that timing problem. When tests run in the software stage, weak points are found and fixed while changes are still low-cost.

Our QEMU-based engines on the esDynamic and esReverse platforms give developers that early view. They run the real binary, collect targeted traces, inject scripted faults, and point directly to the instruction that fails. Analysts can spin up thousand-trace datasets in seconds, explore new attack ideas, and trace root causes without guesswork.

The same scripts sit in CI/CD. Every build reruns the tests, applies clear pass-fail rules, and blocks a merge if countermeasures regress. This keeps protections alive even when aggressive compiler optimizations try to strip them away.

The approach is already proven. PQShield’s continuous runs exposed a compiler bug that weakened several ML-KEM libraries and helped patch them upstream before shipping hardware.

Shift left, automate the checks, follow a clear test plan, and you gain a predictable, verifiable security posture that stands up to real-world attacks.